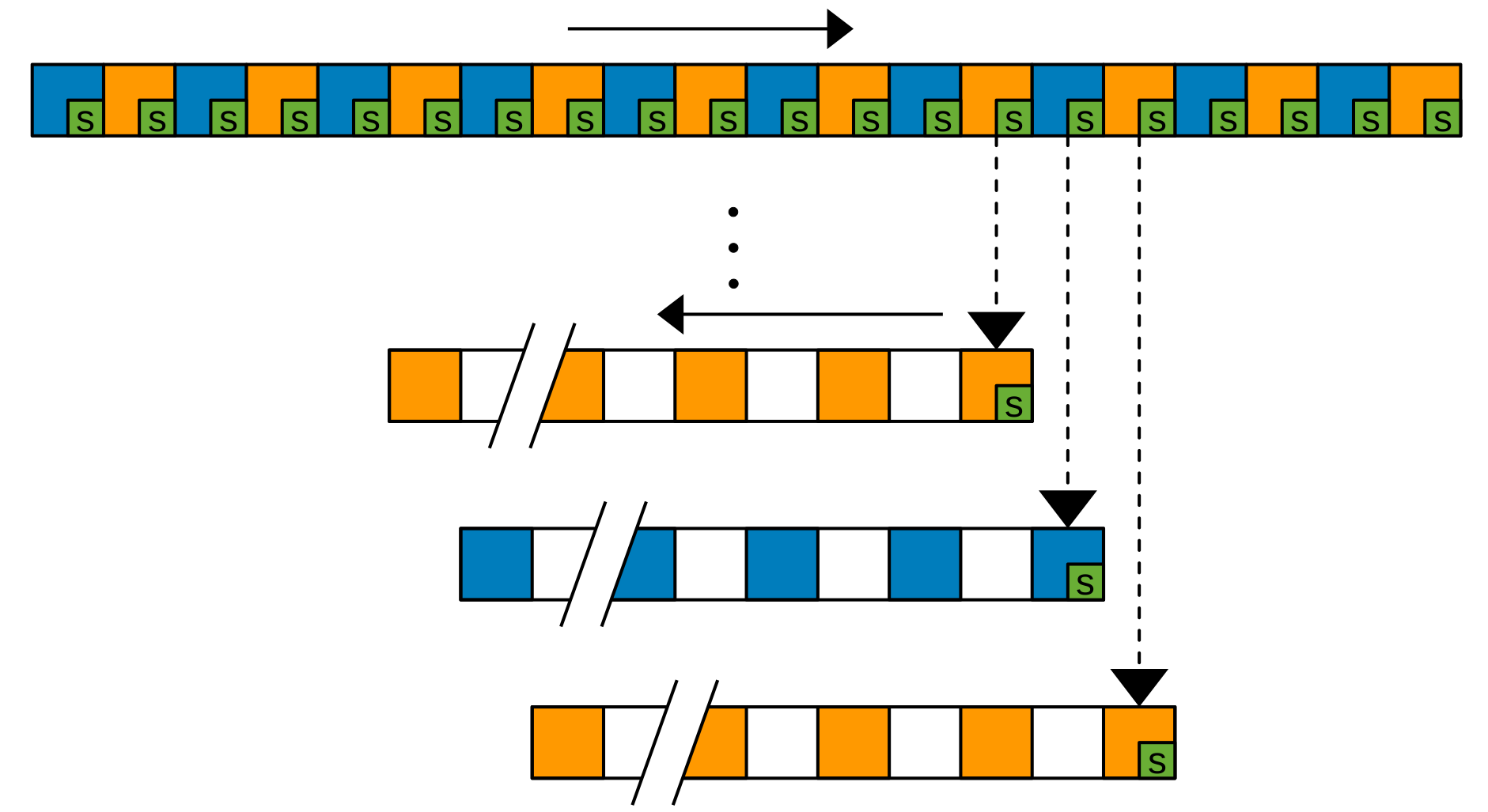

We demonstrate Deep Redundancy (DRED) for the Opus codec. DRED makes it possible to include up to 1 second of redundancy in every 20-ms packet we send. That makes it possible to keep a conversation going even in extremely bad network conditions.
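To see what "1 second of redundancy in every 20-ms packet" buys, the arithmetic is 1000 ms / 20 ms = 50 frames: each packet can describe up to the 50 frames that precede it. The sketch below is a simplified model under that assumption only. It treats redundancy as a plain sliding window over past frames and says nothing about how DRED actually encodes that information. The names `redundancy_depth` and `recoverable_frames` are hypothetical helpers for illustration.

```python
# Simplified, hypothetical model: each packet carries redundancy for the
# `depth` frames before it. This does NOT model DRED's actual encoding.

FRAME_MS = 20         # packet/frame duration from the text
REDUNDANCY_MS = 1000  # maximum redundancy per packet from the text


def redundancy_depth(frame_ms: int = FRAME_MS, redundancy_ms: int = REDUNDANCY_MS) -> int:
    """Number of past frames a single packet can cover."""
    return redundancy_ms // frame_ms


def recoverable_frames(received: list[bool], depth: int) -> list[bool]:
    """Frame k is recoverable if packet k arrived, or if any of the next
    `depth` packets arrived (each covers the `depth` frames before it)."""
    n = len(received)
    result = []
    for k in range(n):
        later = range(k + 1, min(n, k + depth + 1))
        result.append(received[k] or any(received[j] for j in later))
    return result


if __name__ == "__main__":
    # 5 packets received, a burst of 40 lost packets (800 ms), then 5 received.
    received = [True] * 5 + [False] * 40 + [True] * 5
    print(sum(recoverable_frames(received, redundancy_depth())))  # all 50 frames
    print(sum(recoverable_frames(received, 1)))  # only one frame of redundancy
```

With a depth of 50, the first packet after the 800-ms burst covers every lost frame, while a depth of 1 recovers only the last frame of the burst. The model ignores playout deadlines: a frame reconstructed from a packet that arrives after that frame's playout time is only useful if the receiver's jitter buffer is deep enough to wait for it.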

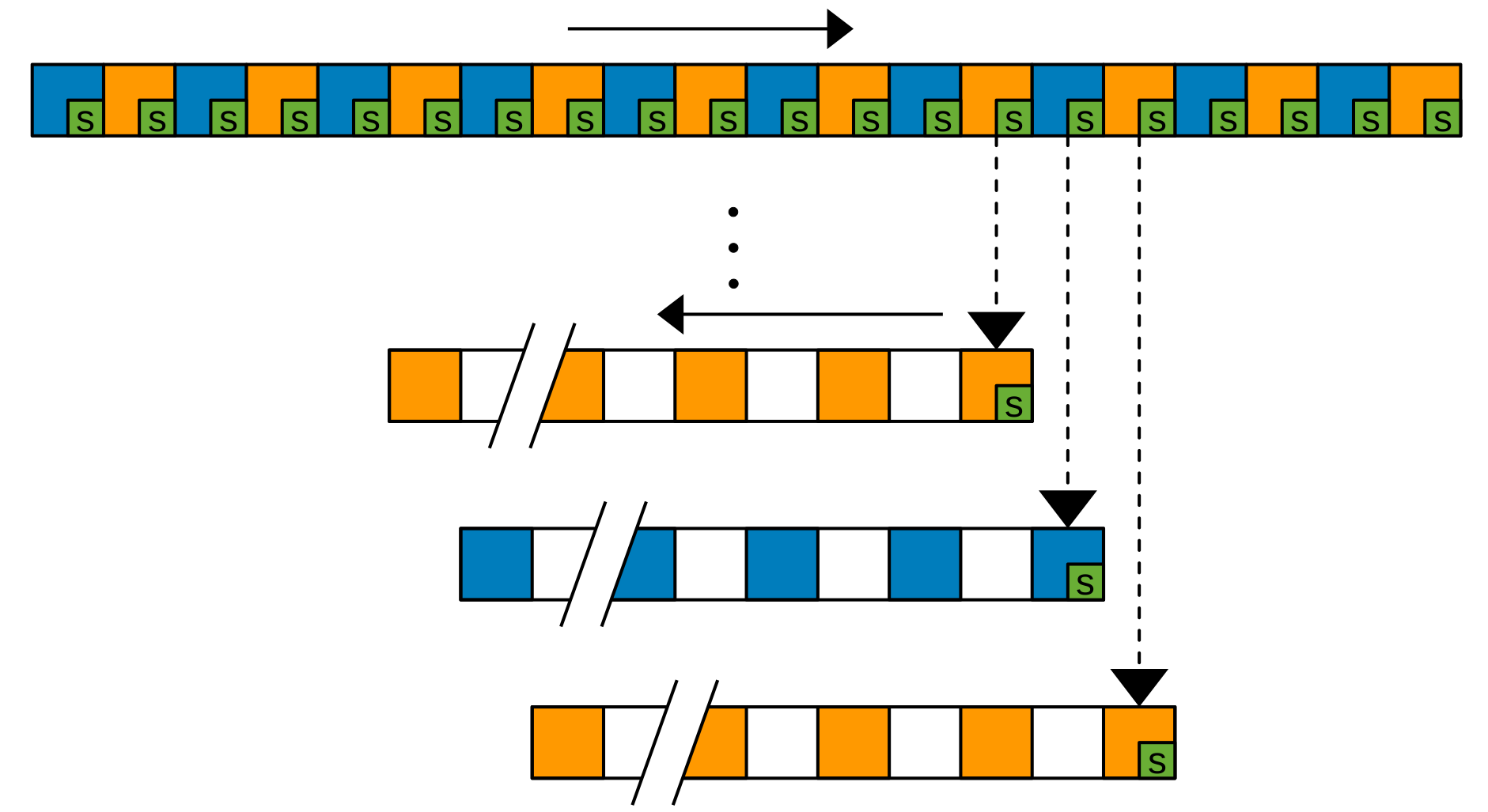

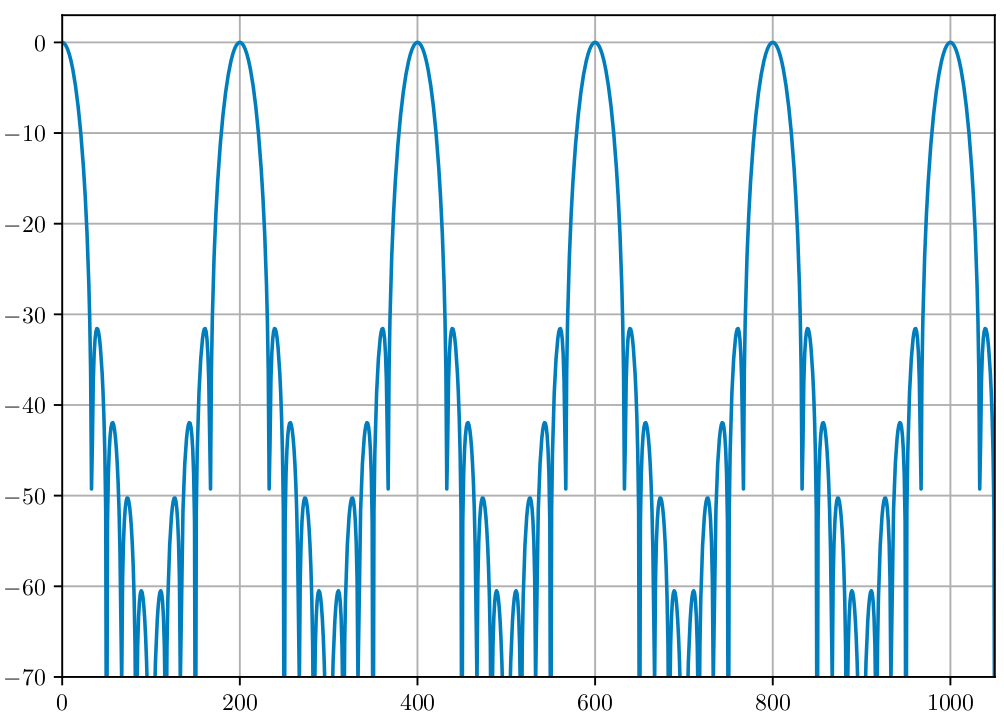

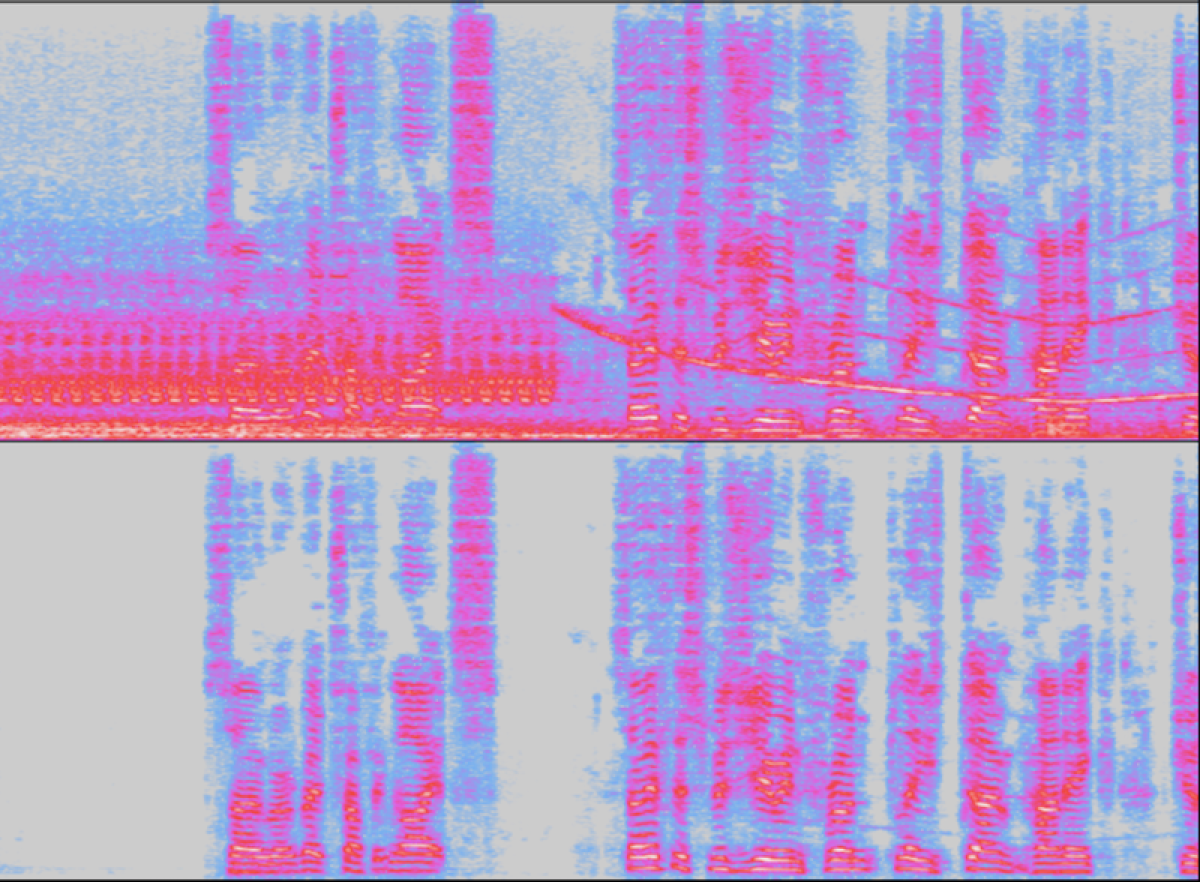

This demo introduces the PercepNet algorithm, which combines signal processing, knowledge of human perception, and deep learning to enhance speech in real time. PercepNet ranked second in the real-time track of the Interspeech 2020 Deep Noise Suppression challenge, despite using only 5% of a CPU core. We've previously talked about PercepNet here, but this time we go into the technical details of how and why it works.

This is some of what I've been up to since joining AWS... My team and I participated in the Interspeech 2020 Deep Noise Suppression Challenge and took both first place in the non-real-time track and second place in the real-time track. See the official Amazon Science blog post for more details on how it happened and how it's already shipping in Amazon Chime.

You can also listen to some samples, or read the papers: