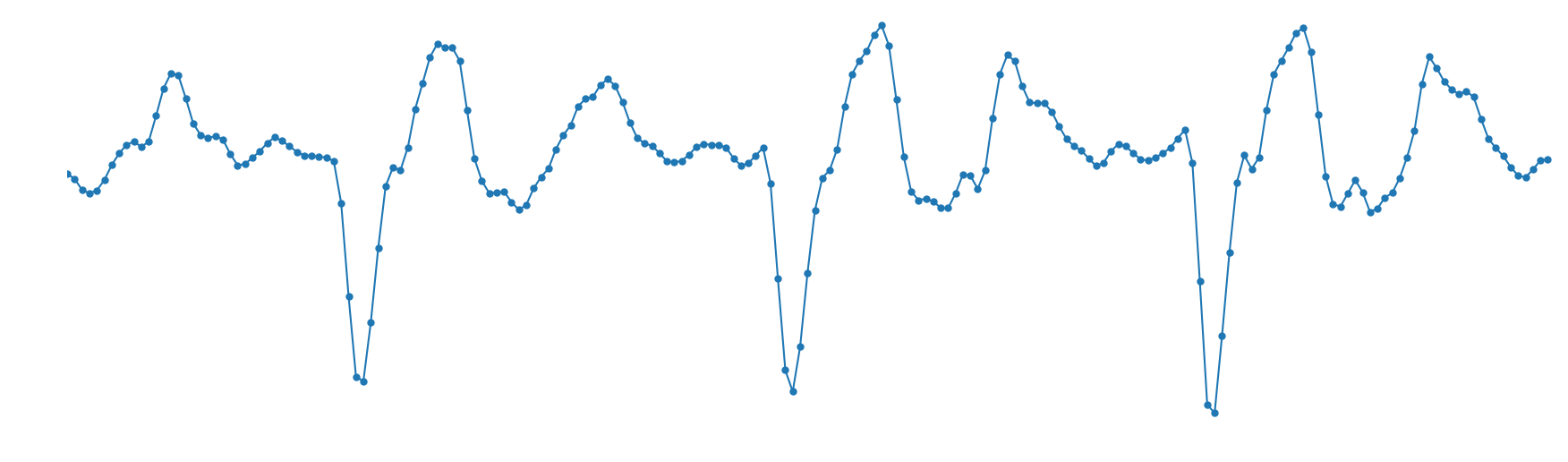

This new demo presents LPCNet, an architecture that combines signal processing and deep learning to improve the efficiency of neural speech synthesis. Neural speech synthesis models like WaveNet have recently demonstrated impressive quality. Unfortunately, their computational complexity has made them hard to use in real time, especially on phones. As in the RNNoise project, one solution is to combine deep learning with digital signal processing (DSP) techniques. This demo explains the motivations for LPCNet, shows what it can achieve, and explores its possible applications.

About Using LPCNet for Noise Suppression

Date: 2019-06-03 06:28 am (UTC)

I've been following your work from Opus to RNNoise, and now LPCNet.

Since RNNoise leaves some residual noise, I'm trying to use LPCNet for noise suppression, as you suggested in "So what can we use this for?". I have some questions:

1. Do I just need to train a new model on noisy speech (along with some clean speech)?

or

2. Should I extract features from the noisy speech (noisy features) and use the clean speech (before mixing with noise) as the target? That would make the training target e = clean speech - prediction. I have tried method 2, but the synthesis results are terrible...

I hope you can give me some advice. Thank you in advance.
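The target in option 2 is the LPC excitation e = s - p, where p is a linear prediction of the current sample from the previous ones (LPCNet uses order 16). The sketch below is a simplified, hypothetical illustration in plain Python, not LPCNet's code: it estimates the LPC coefficients with the Levinson-Durbin recursion directly from a frame's autocorrelation and computes e on the clean signal, using coefficients taken from either the clean or the noisy frame. Assumptions to keep in mind: LPCNet itself derives its LPCs from the cepstral features rather than from the raw samples, the test frame and noise level are made up, and the function names (`autocorrelation`, `levinson_durbin`, `excitation`, `energy`) are illustrative only.

```python
import math
import random


def autocorrelation(x, order):
    """Return r[0..order], the (unnormalized) autocorrelation of frame x."""
    n = len(x)
    return [sum(x[i] * x[i - k] for i in range(k, n)) for k in range(order + 1)]


def levinson_durbin(r, order):
    """Return LPC coefficients a[0..order-1] such that the prediction is
    p[t] = sum_{k=1..order} a[k-1] * s[t-k]."""
    a = [0.0] * (order + 1)  # a[0] unused; a[j] multiplies s[t-j]
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:
            break  # degenerate (e.g. silent) frame: leave remaining coefficients at zero
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err  # reflection coefficient for this order
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k  # remaining prediction-error energy
    return a[1:]


def excitation(signal, a):
    """Return e[t] = s[t] - p[t], assuming zero history before the frame."""
    e = []
    for t, s_t in enumerate(signal):
        p_t = sum(a[k - 1] * signal[t - k] for k in range(1, len(a) + 1) if t >= k)
        e.append(s_t - p_t)
    return e


def energy(x):
    return sum(v * v for v in x)


if __name__ == "__main__":
    ORDER = 16  # LPC order used by LPCNet
    rng = random.Random(0)
    # Hypothetical frame: a decaying two-tone "clean" signal plus additive Gaussian noise.
    clean = [0.99 ** t * (math.sin(0.1 * t) + 0.5 * math.sin(0.37 * t)) for t in range(320)]
    noisy = [s + 0.3 * rng.gauss(0.0, 1.0) for s in clean]
    a_clean = levinson_durbin(autocorrelation(clean, ORDER), ORDER)
    a_noisy = levinson_durbin(autocorrelation(noisy, ORDER), ORDER)
    print("signal energy:              ", energy(clean))
    print("excitation, clean-frame LPC:", energy(excitation(clean, a_clean)))
    print("excitation, noisy-frame LPC:", energy(excitation(clean, a_noisy)))
```

Comparing the two excitation energies gives a rough sense of how much predictive power coefficients estimated from the noisy frame lose when applied to the clean signal; it is a toy check, not a statement about how LPCNet behaves when trained this way.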

Re: About Using LPCNet for Noise Suppression

Date: 2019-06-19 04:20 pm (UTC)

Re: About Using LPCNet for Noise Suppression

Date: 2019-07-19 09:26 am (UTC)